Also, you can find my book on building an application security program on Amazon or Manning

I’ve spoken before about AI (more specifically LLMs) being used to enhance software development. Things are advancing quickly in the AI space as more workflows are handed over to AI to perform. This can be excellent news when it comes to gaining efficiencies, but it can also be concerning for those who are worried about their jobs. Of course, AI will take away some work and fill gaps in resources; this is inevitable.

However, we can also harness the power of AI to do more than write emails, create images, or help us plan parties. Another approach uses AI agents to drive security throughout an organization, and it’s helping to redefine what’s possible when we remove human processing limitations as the bottleneck. Today, many of these AI agents can intelligently automate workflows or even run entire security operations.

What Makes an AI Agent Different from “Just AI”

The distinction matters. Your typical AI security tool takes input, processes it, and gives you output. An agent operates in an ecosystem: it perceives its environment through sensors (APIs, log streams, config files), makes decisions using models and logic, and takes actions that modify that environment. It then repeats this cycle while continuously learning and adapting.
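To make that loop concrete, here is a minimal Python sketch of a perceive, decide, act cycle. The `Event` type, the rules inside `decide`, and the action names are all hypothetical stand-ins; a real agent would read from APIs or log streams and call real remediation tooling, and the learning step is left out entirely.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Event:
    source: str    # hypothetical sensor name, e.g. "cloudtrail"
    kind: str      # hypothetical event type
    severity: int  # 0 (informational) to 10 (critical)


def decide(event: Event) -> str:
    """Map an observed event to an action name (illustrative rules only)."""
    if event.kind == "public_bucket" and event.severity >= 7:
        return "remediate"
    if event.severity >= 4:
        return "escalate"
    return "ignore"


def run_agent(events: Iterable[Event], act: Callable[[str, Event], None]) -> None:
    """Perceive each event, decide on an action, then act on the environment."""
    for event in events:        # perceive
        action = decide(event)  # decide
        if action != "ignore":
            act(action, event)  # act


# Record the actions instead of touching a real environment.
taken: list[tuple[str, str]] = []
run_agent(
    [
        Event("cloudtrail", "public_bucket", 9),
        Event("siem", "failed_login", 5),
        Event("siem", "heartbeat", 0),
    ],
    act=lambda action, event: taken.append((action, event.kind)),
)
print(taken)
```

Passing `act` in as a callable keeps the decision logic testable without any access to production systems.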

More specifically, here’s what defines an AI agent in a security context:

Autonomy: The agent operates without human intervention. It doesn’t wait to be told to scan for secrets or check for misconfigurations; it continuously monitors and acts based on triggers and conditions you’ve defined.

Sensors and Action: Agents interact with your environment through APIs, webhooks, file systems, and cloud SDKs. They pull data from SIEMs, ticketing systems, CI/CD pipelines, and cloud control planes. More importantly, they can modify that environment when needed to maintain a security posture: rotating credentials, blocking deployments, updating firewall rules, or triggering incident response workflows.

Decision Logic and Models: Behind the scenes, agents use LLMs for natural language understanding, machine learning for pattern recognition, and rule engines for policy enforcement. This intelligence helps the agent not just understand context, but also helps it to know when to escalate, when to auto-remediate, and when to stand down.

Communication and Coordination: As with humans, agents work better together. Modern security agent architectures use event-driven messaging (CloudEvents), streaming RPCs (gRPC) for low-latency decisions, and message queues (Kafka, Redis Streams) for coordinating responses across distributed systems. A vulnerability scanner agent can alert a posture management agent, which triggers a policy enforcement agent, which blocks a deployment, all without human intervention.
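The scanner-to-posture-to-enforcement chain can be sketched with a tiny in-memory event bus. This is only an illustration: the `EventBus` class stands in for a real broker such as Kafka or Redis Streams, and the event type names and payload fields are made up for the example.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """In-memory stand-in for a message broker (assumption for this sketch)."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, data: dict) -> None:
        # Deliver the event to every handler registered for this type.
        for handler in self._handlers[event_type]:
            handler(data)


bus = EventBus()
blocked: list[str] = []


def posture_agent(finding: dict) -> None:
    # Only critical findings are turned into policy violations.
    if finding["severity"] == "critical":
        bus.publish("policy.violation", {"image": finding["image"]})


def enforcement_agent(violation: dict) -> None:
    # A real agent would call the CI/CD system; here we only record the block.
    blocked.append(violation["image"])


bus.subscribe("vuln.found", posture_agent)
bus.subscribe("policy.violation", enforcement_agent)

# The scanner agent publishes findings; the rest of the chain reacts.
bus.publish("vuln.found", {"image": "api:1.4.2", "severity": "critical"})
bus.publish("vuln.found", {"image": "web:2.0.0", "severity": "low"})
print(blocked)
```

Because each agent only knows about event types, not about the other agents, you can add or swap agents without rewiring the chain.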

The Force Multiplier Reality

To see how impactful agents can be in an organization, one only has to look at the average enterprise security team that deals with tens of thousands of alerts daily. Utilizing AI-powered threat detection and response, organizations can often see a dramatic reduction in breach detection time as well as fewer false positives compared to rule-based systems. We’ve spent the last few decades working on automating as much as possible. AI puts automation on steroids by allowing for the investigation of large data sets, parallel workflows, and response times faster than a human can manage.

Simply put: alert volume scales linearly with infrastructure growth, but human processing capacity doesn’t. Agents can change that.

Where AI Agents Excel Right Now

While the opportunities to apply agents in a security program abound, here are a few examples.

Behavioral Analytics for Modern Attack Vectors

Agents can baseline normal API usage patterns across an entire infrastructure. When someone starts making unusual calls to cloud management APIs at 3 AM from a new location, agents can correlate this across network logs, identity systems, and threat intelligence feeds. The traditional approach of rules-based detection would likely generate dozens of uncorrelated alerts, forcing a human to review and prioritize them. Agent-based systems can instead deliver a single, prioritized threat assessment with recommended actions.
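As a rough sketch of that correlation step, the snippet below folds alerts from several sources into one assessment per principal. The alert fields, signal names, and weights are invented for illustration; a real system would also consider time windows and learned baselines.

```python
from collections import defaultdict

# Hypothetical alerts from three separate detection sources.
alerts = [
    {"source": "network", "principal": "svc-deploy", "signal": "new_geo", "weight": 3},
    {"source": "identity", "principal": "svc-deploy", "signal": "off_hours_login", "weight": 2},
    {"source": "cloud_api", "principal": "svc-deploy", "signal": "unusual_api_call", "weight": 4},
    {"source": "identity", "principal": "alice", "signal": "off_hours_login", "weight": 2},
]


def correlate(alerts: list[dict]) -> list[dict]:
    """Group alerts by principal and return one assessment each, highest score first."""
    grouped: dict[str, list[dict]] = defaultdict(list)
    for alert in alerts:
        grouped[alert["principal"]].append(alert)

    assessments = []
    for principal, items in grouped.items():
        assessments.append({
            "principal": principal,
            "score": sum(a["weight"] for a in items),
            "signals": sorted(a["signal"] for a in items),
            "sources": len({a["source"] for a in items}),
        })
    return sorted(assessments, key=lambda a: a["score"], reverse=True)


assessments = correlate(alerts)
for assessment in assessments:
    print(assessment)
```

Four raw alerts become two ranked assessments, with the service account that tripped three independent sources at the top.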

Pipeline Security: Securing the SDLC

DevSecOps has a coordination problem. Your SAST tool finds vulnerabilities, your DAST tool finds different ones, your SCA tool flags dependencies, and your container scanner identifies insecure base images. Traditionally, developers see this as a wall of noise blocking their deployments. While ASPM tools are designed to provide better coordination across these scanners, agents take that to a different level.

Testing agents can share results via event-driven communication, eliminating redundant scans. Coordinated agents can run parallel security, compliance, and container scans without blocking developer commits. Triage agents trained on historical vulnerability data and an organization’s risk posture can cut down on the noise and prioritize issues in the proper context.
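One small piece of that triage work is merging overlapping findings from different scanners. Here is a minimal sketch, assuming each tool reports a shared vulnerability ID and component name; the field names and the `VULN-*` identifiers are hypothetical.

```python
def merge_findings(findings: list[dict]) -> list[dict]:
    """Collapse findings that share an ID and component, keeping the worst severity."""
    merged: dict[tuple[str, str], dict] = {}
    for finding in findings:
        key = (finding["id"], finding["component"])
        entry = merged.setdefault(
            key,
            {"id": finding["id"], "component": finding["component"],
             "tools": set(), "severity": 0.0},
        )
        entry["tools"].add(finding["tool"])
        entry["severity"] = max(entry["severity"], finding["severity"])
    # Most severe issues first, so developers see what matters at the top.
    return sorted(merged.values(), key=lambda e: e["severity"], reverse=True)


# Hypothetical scanner output: two tools report the same issue.
raw_findings = [
    {"tool": "sca", "id": "VULN-1", "component": "libexample", "severity": 7.5},
    {"tool": "container", "id": "VULN-1", "component": "libexample", "severity": 8.1},
    {"tool": "sast", "id": "VULN-2", "component": "payments-api", "severity": 5.0},
]

triaged = merge_findings(raw_findings)
for item in triaged:
    print(item["id"], item["component"], sorted(item["tools"]), item["severity"])
```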

Credential Hunters: Advanced Secrets Detection

Our typical secrets scanning tools use regex patterns and entropy analysis to flag potential credentials. Predictably, this can generate massive numbers of false positives. A credential hunting agent, by contrast, can not only detect credentials but also validate whether they are truly exploitable.

For instance, if an agent flags a potential AWS access key, service-specific detectors test it against AWS APIs to confirm it’s valid before alerting your team. This reduces false positives by 89% compared to pattern matching alone. Additionally, a repo scanning agent can analyze git history and container layers to uncover long-lived secrets in legacy backups.
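The detect-then-validate idea can be sketched in a few lines of Python. The regex matches the well-known `AKIA` prefix format of AWS access key IDs, and the sample key is the placeholder used in AWS documentation. The entropy threshold and the `is_live` callback are assumptions for this sketch; a real validator would make an authenticated call to the provider, which is deliberately left out here.

```python
import math
import re
from collections import Counter
from typing import Callable

# AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
AWS_KEY_ID = re.compile(r"\b(AKIA[0-9A-Z]{16})\b")


def shannon_entropy(text: str) -> float:
    """Bits of entropy per character; low values suggest filler, not a real key."""
    counts = Counter(text)
    return -sum((n / len(text)) * math.log2(n / len(text)) for n in counts.values())


def find_candidates(text: str, min_entropy: float = 3.0) -> list[str]:
    """Stage 1: pattern match, then drop low-entropy matches (threshold is a guess)."""
    return [m for m in AWS_KEY_ID.findall(text) if shannon_entropy(m) >= min_entropy]


def confirmed_secrets(text: str, is_live: Callable[[str], bool]) -> list[str]:
    """Stage 2: keep only candidates that the (hypothetical) validator says are live."""
    return [candidate for candidate in find_candidates(text) if is_live(candidate)]


config = """
aws_access_key_id = AKIAIOSFODNN7EXAMPLE
placeholder_key   = AKIAAAAAAAAAAAAAAAAA
"""

candidates = find_candidates(config)
print(candidates)
# With a validator that reports nothing as live, no alert is raised.
print(confirmed_secrets(config, is_live=lambda key: False))
```

Keeping validation behind a callback means the noisy detection stage can run everywhere, while the step that actually touches a cloud provider runs only where you permit it.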

Consider an agent that finds a viable secret in a configuration file checked in to GitHub. When confirmed, a secrets lifecycle agent can automatically trigger rotation via your secrets manager (HashiCorp Vault, AWS Secrets Manager), update Kubernetes secrets, and redeploy affected services.

Zero-Trust Policy Decision Points

Zero-Trust is still one of the most effective methods of security defense against adversarial compromise. While much of zero-trust relies on static permissions mixed with behavioral context, agents can bring us closer to true continuous evaluation. Policy evaluation agents can track user sessions and enforce never-trust assumptions for every access request. Risk assessment agents can combine identity data, endpoint posture, network context, and threat intelligence to generate composite risk scores in real time.
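A composite risk score can be as simple as a weighted sum of normalized signals. The sketch below is one way to do it; the weights, thresholds, and decision names are illustrative assumptions, not recommended values.

```python
# Hypothetical weights for each signal family; they sum to 1.0.
WEIGHTS = {"identity": 0.35, "endpoint": 0.25, "network": 0.15, "threat_intel": 0.25}


def composite_risk(signals: dict[str, float]) -> float:
    """Weighted sum of per-signal risk values, each expected in the range 0.0 to 1.0.

    A missing signal is scored as 1.0: under never-trust assumptions,
    what we cannot observe is treated as maximally risky.
    """
    return round(sum(w * signals.get(name, 1.0) for name, w in WEIGHTS.items()), 3)


def access_decision(score: float) -> str:
    """Translate a score into an action (thresholds are assumptions)."""
    if score >= 0.7:
        return "deny"
    if score >= 0.4:
        return "step_up_auth"
    return "allow"


request_signals = {"identity": 0.2, "endpoint": 0.1, "network": 0.9, "threat_intel": 0.8}
score = composite_risk(request_signals)
print(score, access_decision(score))
```

Re-running this on every request, rather than once at login, is what moves the check toward continuous evaluation.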

When the organization’s threat intelligence workflows flag a new credential-stuffing campaign, policy adaptation agents can automatically tighten access controls for affected user populations and trigger re-authentication without manual intervention.

From Policy Documents to Executable Code: Making Security Real

I spent some time thinking about a problem space that was ripe for the application of AI. I was recently reviewing security policy documents for alignment to industry standards and frameworks. The truth is that in many organizations there exist beautifully written security policies sitting in SharePoint as static documents. They’re provided to auditors, they’re reviewed periodically, and they’re used to measure and define technical and business decisions. But these are largely manual efforts done by humans. This can lead to the organization’s actual security posture bearing only a passing resemblance to those written policies.

And the issue isn’t commitment; it’s the gap between what the documentation says, how it is interpreted, and what is actually enforced. Writing “Users must authenticate with multi-factor authentication” in a policy document doesn’t make MFA happen. Traditional approaches require security teams to manually translate policy requirements into firewall rules, identity provider configurations, and CI/CD pipeline checks. This can be a slow process.

Policy-as-Code: The Missing Link

What if a written security policy could enforce itself through OPA (Open Policy Agent)? The way I thought about this problem was:

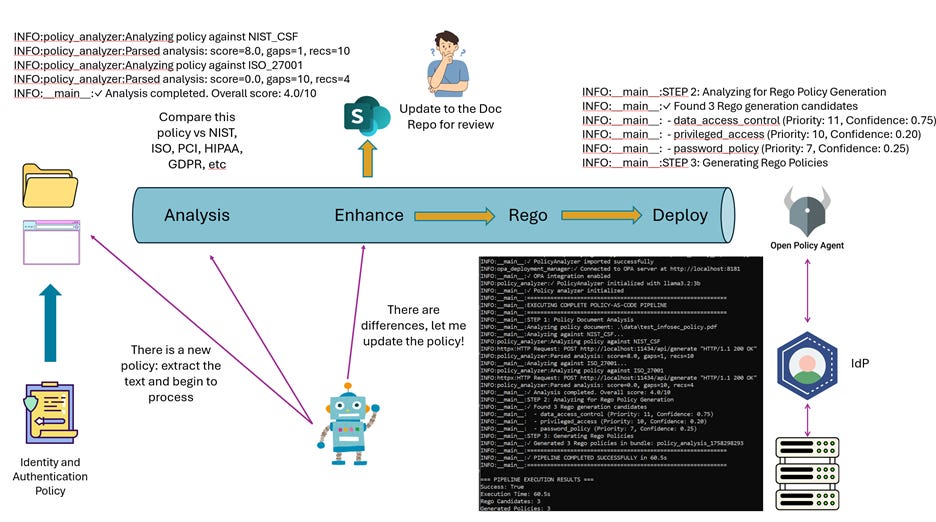

“What if I could take a policy from an organization, match it against industry standards, update the policy, and codify it for OPA?”

Turns out, it’s completely doable. AI agents can be used to automate the translation from written policy to executable enforcement in moments.

Here’s the architecture that makes this work:

Step 1: Intelligent Policy Analysis

An AI agent can monitor your policy document repository and watch for changes or updates. It ingests your security policy document, extracts the actual requirements, and analyzes them against established frameworks (NIST CSF, ISO 27001, PCI DSS, etc.).

The agent identifies gaps by comparing your current policy against framework requirements:

“Your authentication policy mentions passwords but doesn’t specify MFA requirements. NIST CSF PR.AC-7 calls for authentication (single-factor or multi-factor) commensurate with the risk of the transaction, which supports requiring MFA for remote access.”
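To show the shape of that gap check, here is a deliberately naive sketch that uses keyword patterns where a real agent would use an LLM and an actual framework catalog. The control IDs (`EX-AUTH-1` and so on) are placeholders I made up, not real framework identifiers.

```python
import re

# Hypothetical control catalog: placeholder IDs, not real framework identifiers.
CONTROLS = {
    "EX-AUTH-1": {"topic": "multi-factor authentication",
                  "patterns": [r"\bmfa\b", r"multi-factor"]},
    "EX-AUTH-2": {"topic": "password length",
                  "patterns": [r"password complexity", r"minimum length"]},
    "EX-LOG-1": {"topic": "audit logging",
                 "patterns": [r"audit log"]},
}


def find_gaps(policy_text: str) -> list[str]:
    """Return the IDs of controls that the policy text never mentions."""
    text = policy_text.lower()
    return [
        control_id
        for control_id, control in CONTROLS.items()
        if not any(re.search(pattern, text) for pattern in control["patterns"])
    ]


policy = (
    "Users must authenticate with a password. "
    "Passwords have a minimum length of 14 characters. "
    "Audit logs are retained for one year."
)

gaps = find_gaps(policy)
for control_id in gaps:
    print(f"Gap: policy does not address {CONTROLS[control_id]['topic']} ({control_id})")
```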

Step 2: Recommendation Generation and Prioritization

Based on identified gaps, the agent generates specific recommendations ranked by priority and confidence scores. High-priority, high-confidence recommendations might include:

“Implement multi-factor authentication for all remote access” (Priority: 11, Confidence: 0.75)

“Establish privileged access management with time-based controls” (Priority: 10, Confidence: 0.80)

“Enforce password complexity requirements” (Priority: 7, Confidence: 0.85)

The agent can then rewrite the policy with the recommended changes included and trigger a review with a human. Depending on the organization, you can decide whether the agent needs to wait for human approval before moving to the next step, or you can allow the agent to go ahead and generate the OPA code.
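The ranking and the approval gate from this step might be sketched like this, using the three example recommendations from above. The `Recommendation` type and the 0.80 review threshold are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    text: str
    priority: int
    confidence: float


recommendations = [
    Recommendation("Enforce password complexity requirements", 7, 0.85),
    Recommendation("Implement multi-factor authentication for all remote access", 11, 0.75),
    Recommendation("Establish privileged access management with time-based controls", 10, 0.80),
]

# Highest priority first; confidence breaks ties.
ranked = sorted(recommendations, key=lambda r: (r.priority, r.confidence), reverse=True)

# Hypothetical gate: anything below this confidence waits for a human reviewer.
REVIEW_THRESHOLD = 0.80
needs_review = [r for r in ranked if r.confidence < REVIEW_THRESHOLD]

for rec in ranked:
    status = "human review" if rec in needs_review else "proceed"
    print(f"{rec.priority:>2}  {rec.confidence:.2f}  {status:<12}  {rec.text}")
```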

Step 3: Rego Policy Generation

After the agent analyzes each recommendation to determine whether it’s a candidate for automated enforcement through OPA Rego policies, it generates the appropriate Rego code and prepares it for deployment to OPA. For “Implement multi-factor authentication,” the agent:

Selects the appropriate policy template (MFA enforcement)

Generates Rego code that evaluates authentication requests

Creates test cases to validate the policy logic

Packages everything into a deployable policy bundle
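The template-selection and generation steps above can be approximated with Python’s `string.Template`. This is a sketch rather than what the agent actually runs: the template text, the `render_mfa_policy` helper, and the flag names are assumptions, and the output uses the `if` keyword form with `import rego.v1`.

```python
from string import Template

# Hypothetical MFA enforcement template; $package and $conditions are filled in per policy.
MFA_TEMPLATE = Template("""\
package $package

import rego.v1

default allow := false

allow if {
$conditions
}
""")


def render_mfa_policy(package: str, required_flags: list[str]) -> str:
    """Render Rego source that requires every listed input.user flag to be true."""
    for flag in required_flags:
        # Guard against injecting arbitrary Rego through a flag name.
        if not flag.isidentifier():
            raise ValueError(f"invalid flag name: {flag!r}")
    conditions = "\n".join(f"\tinput.user.{flag} == true" for flag in required_flags)
    return MFA_TEMPLATE.substitute(package=package, conditions=conditions)


rego_source = render_mfa_policy("authz.mfa", ["mfa_enabled", "mfa_verified"])
print(rego_source)
```

Filling a vetted template keeps the generated policy predictable, and the identifier check means a malformed recommendation fails loudly instead of producing surprising Rego.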

The generated mfa_enforcement.rego policy looks something like this:

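```rego
package authz.mfa

import rego.v1

# Deny by default; a request is allowed only if the rule below matches.
default allow := false

# Allow only when the user has MFA enabled and has completed MFA verification.
allow if {
	input.user.mfa_enabled == true
	input.user.mfa_verified == true
}
```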

Step 4: Automated Deployment to OPA

The agent can then deploy the generated policies to your OPA server, making them immediately enforceable. Policy bundles are versioned, allowing rollback if needed, and a deployment report can be created that shows:

Policies deployed and their endpoints

Test results confirming policy logic

Integration points with your existing security infrastructure
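For a simple setup, the deployment step could push a policy through OPA’s REST Policy API (`PUT /v1/policies/<id>` with the Rego source as a plain-text body). The sketch below only builds the request; the server URL, policy ID, and helper name are assumptions, and a bundle-based deployment would look different.

```python
import urllib.request


def build_policy_upload(opa_url: str, policy_id: str, rego_source: str) -> urllib.request.Request:
    """Build (but do not send) the request that creates or updates a policy in OPA."""
    return urllib.request.Request(
        url=f"{opa_url.rstrip('/')}/v1/policies/{policy_id}",
        data=rego_source.encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "text/plain"},
    )


# Assumes a local OPA server on its default port.
request = build_policy_upload(
    "http://localhost:8181", "mfa_enforcement", "package authz.mfa\n"
)
print(request.get_method(), request.full_url)

# To actually deploy, send it to a running OPA server:
# urllib.request.urlopen(request)
```

Separating request construction from sending makes it easy to log exactly what would be deployed, which is useful input for the deployment report.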

Step 5: Real-Time Enforcement

Now when a user attempts to authenticate, your authentication service queries OPA with the authentication context. OPA evaluates the request against your MFA policy and returns a decision: allow or deny, with detailed violation information if denied.
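On the authentication service side, the query is a `POST` to OPA’s Data API with the request context wrapped in an `input` object. Here is a minimal sketch for the `authz.mfa` package; the server URL and helper names are assumptions, and it only reads the boolean `allow` result rather than detailed violation information.

```python
import json
import urllib.request


def build_decision_query(opa_url: str, user: dict) -> urllib.request.Request:
    """Build the Data API request that asks OPA to evaluate authz.mfa.allow."""
    payload = json.dumps({"input": {"user": user}}).encode("utf-8")
    return urllib.request.Request(
        url=f"{opa_url.rstrip('/')}/v1/data/authz/mfa/allow",
        data=payload,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


def parse_decision(response_body: bytes) -> bool:
    """Fail closed: anything other than an explicit true result is a deny."""
    return json.loads(response_body).get("result", False) is True


query = build_decision_query(
    "http://localhost:8181", {"mfa_enabled": True, "mfa_verified": False}
)
print(query.full_url)

# With a running OPA server:
# with urllib.request.urlopen(query) as response:
#     allowed = parse_decision(response.read())

# Sample response bodies: an explicit allow, and an undefined decision.
print(parse_decision(b'{"result": true}'), parse_decision(b"{}"))
```

Treating a missing `result` as a deny matters because OPA omits the key when a decision is undefined, for example if the policy was never loaded.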

Here is a quick and dirty mockup of this workflow:

The written policy is now an active security control. Change the policy document, re-run the agent, and enforcement updates automatically. Have a new policy to write? Add it to your repository and let the agent validate and codify it.

Things to Consider

I’ve been working on a proof of concept and have this working locally in my environment (cue the “works in my environment” jokes), and I plan to have this available more broadly soon. But here are a few things I found while attempting this workflow with an agent:

Accuracy and Coverage: Not every policy statement can be automatically converted to Rego. In practice, about 60-70% of typical security policy recommendations map cleanly to enforceable controls. Authentication, authorization, data access, and configuration management policies work well. Organizational policies about security awareness training or vendor management require human oversight.

Template Library: While the agent can create new Rego code, it’s better to maintain a library of Rego policy templates for common patterns such as MFA enforcement, privileged access management, data classification controls, password policies, and session management. When the agent identifies a recommendation matching a template, it can then customize that template.

Validation and Testing: Every generated policy should ship with test cases, and the pipeline should verify both that the Rego compiles and that those tests pass. This catches logic errors in a lower environment before they impact production.

Integration Challenges: OPA deployment requires coordination with your authentication systems, API gateways, Kubernetes admission controllers, and other enforcement points you’re targeting. The agent can generate the policy logic, but you still need to wire up those integration points.

Human Review Remains Critical: Generated policies get committed to version control via pull requests, allowing security teams to review before deployment. High-confidence, well-tested patterns might auto-merge to development environments, but production deployments should require human approval initially.
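That review rule can be written down as a small gate that the pipeline consults before merging. The environment names, the 0.9 confidence cutoff, and the returned action strings are assumptions for this sketch.

```python
def promotion_action(
    environment: str,
    confidence: float,
    tests_passed: bool,
    min_confidence: float = 0.9,
) -> str:
    """Decide what happens to a generated policy's pull request."""
    if not tests_passed:
        return "reject"
    if environment == "production":
        # Production always waits for a person, no matter how confident the agent is.
        return "require_human_approval"
    if confidence >= min_confidence:
        return "auto_merge"
    return "require_human_approval"


print(promotion_action("development", 0.95, tests_passed=True))
print(promotion_action("production", 0.99, tests_passed=True))
print(promotion_action("development", 0.95, tests_passed=False))
```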

We know that the security industry is resource and budget constrained. And while we’ve spent a lot of time working on automation, AI agents can take automation to a new level, enabling faster reaction times and more consistent application of security controls. Instead of scaling human processing capacity, we’re automating the processing layer entirely. This can close the gap between threat velocity and human response capacity.

If you’re looking to create or bring agents into your cybersecurity program, start by assessing where your biggest pain points exist, select pilot use cases with high volume and low ambiguity, and measure relentlessly. Begin with commercial platforms to build expertise, then graduate to custom solutions as you understand the operational reality. Yes, humans remain essential for strategic decisions, complex investigations, and ethical judgment, but routine operations that consume the bulk of your team’s time can and should be passed to agents.

This is not about replacing people but about reducing the workload on already overworked and resource-constrained teams.